Deploy a Kubernetes cluster with CoreOS

This article has been created by an automatic translation software. You can view the article source here.

This procedure describes how to quickly and simply deploy a multi-node Kubernetes cluster with 3 CoreOS instances. Kubernetes works in client-server mode: the Kubernetes client is called the "Kubernetes minion" and the server the "Kubernetes master". The Kubernetes master instance centrally orchestrates the Kubernetes minion instances. In our example, one CoreOS instance plays the role of Kubernetes master and the other two play the role of Kubernetes nodes (minions).

Kubernetes is an open source orchestration system created by Google for managing Docker application containers across a cluster of multiple hosts (3 CoreOS VMs in our example). It handles the deployment, maintenance, and scaling of applications. For more information, see the Kubernetes project on GitHub.

We assume that your 3 CoreOS instances are already deployed, that they can communicate with each other, and that you are logged in over SSH as the user core.

If this is not already done, update your CoreOS instances so that they run at least CoreOS 653.0.0 and include etcd2 (see our FAQ "Update CoreOS manually"). In our case, all our instances run CoreOS stable 681.2.0.

$ cat /etc/lsb-release

DISTRIB_ID=CoreOS

DISTRIB_RELEASE=681.2.0

DISTRIB_CODENAME="Red Dog"

DISTRIB_DESCRIPTION="CoreOS 681.2.0"
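
The version requirement above can also be checked by a script. The following is a minimal sketch and not part of the original procedure: `version_ge` is a hypothetical helper name, and the sample text stands in for the content of /etc/lsb-release shown above.

```shell
# Hypothetical helper: succeeds when dotted version $1 is >= dotted version $2.
version_ge() {
    v1=$1
    v2=$2
    for i in 1 2 3; do
        p1=${v1%%.*}
        p2=${v2%%.*}
        if [ "$p1" -gt "$p2" ]; then return 0; fi
        if [ "$p1" -lt "$p2" ]; then return 1; fi
        # Drop the component just compared; use 0 when none is left.
        case $v1 in *.*) v1=${v1#*.} ;; *) v1=0 ;; esac
        case $v2 in *.*) v2=${v2#*.} ;; *) v2=0 ;; esac
    done
    return 0
}

# Sample taken from the output above; on a real instance, read /etc/lsb-release instead.
sample='DISTRIB_ID=CoreOS
DISTRIB_RELEASE=681.2.0'
release=$(printf '%s\n' "$sample" | sed -n 's/^DISTRIB_RELEASE=//p')

if version_ge "$release" 653.0.0; then
    echo "CoreOS $release meets the 653.0.0 minimum"
else
    echo "CoreOS $release is too old, update it first"
fi
```

On an instance, the `sample` variable could be replaced by `$(cat /etc/lsb-release)`.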

We must also make sure that each CoreOS instance has a different machine ID, which is required for correct operation in a cluster. The simplest way is to delete the /etc/machine-id file and reboot each of your CoreOS instances:

$ sudo rm -f /etc/machine-id && sudo reboot
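
Once the instances have rebooted, the uniqueness of the machine IDs can be verified. The sketch below is only an illustration: `duplicate_machine_ids` is a hypothetical helper, and the three IDs are made-up placeholders, not values from real instances.

```shell
# Hypothetical helper: reads one machine ID per line on stdin and prints
# each ID that appears more than once (no output means all IDs are unique).
duplicate_machine_ids() {
    sort | uniq -d
}

# Made-up IDs for illustration; on real instances, collect the content of
# /etc/machine-id from each host (for example over ssh) after the reboot.
ids='11111111111111111111111111111111
22222222222222222222222222222222
33333333333333333333333333333333'

dups=$(printf '%s\n' "$ids" | duplicate_machine_ids)
if [ -z "$dups" ]; then
    echo "all machine IDs are unique"
else
    echo "duplicate machine IDs: $dups"
fi
```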

Kubernetes Master instance configuration:

Overwrite the default cloud-config.yml file with our Kubernetes master configuration by running the following commands (only on the instance that will act as the master, and in the order shown below):

core@Kube-MASTER ~ $ sudo wget -O /usr/share/oem/cloud-config.yml http://mirror02.ikoula.com/priv/coreos/kubernetes-master.yaml

--2015-06-22 15:55:48-- http://mirror02.ikoula.com/priv/coreos/kubernetes-master.yaml

Resolving mirror02.ikoula.com... 80.93.X.X, 2a00:c70:1:80:93:81:178:1

Connecting to mirror02.ikoula.com|80.93.X.X|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 8913 (8.7K) [text/plain]

Saving to: '/usr/share/oem/cloud-config.yml'

/usr/share/oem/cloud-config.yml 100%[===================================================================================================>] 8.70K --.-KB/s in 0s

2015-06-22 15:55:48 (148 MB/s) - '/usr/share/oem/cloud-config.yml' saved [8913/8913]

core@Kube-MASTER ~ $ export `cat /etc/environment`

core@Kube-MASTER ~ $ sudo sed -i 's#PRIVATE_IP#'$COREOS_PRIVATE_IPV4'#g' /usr/share/oem/cloud-config.yml
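
To see what the last two commands do without touching a real instance, the same steps can be replayed in a scratch directory. This is a sketch under assumptions: the one-line template is hypothetical (the real kubernetes-master.yaml content is not reproduced here), and the environment file only mimics the `COREOS_PRIVATE_IPV4` entry used above.

```shell
# Work in a scratch directory so nothing on the real system is touched.
workdir=$(mktemp -d)

# Stand-in for /etc/environment (the value is the master IP used in this article).
printf 'COREOS_PRIVATE_IPV4=10.1.1.138\n' > "$workdir/environment"

# Stand-in for the downloaded cloud-config; this line is hypothetical.
printf 'listen: http://PRIVATE_IP:2379\n' > "$workdir/cloud-config.yml"

# Same idea as the commands above: load the KEY=VALUE pairs, then substitute.
export $(cat "$workdir/environment")
sed 's#PRIVATE_IP#'"$COREOS_PRIVATE_IPV4"'#g' "$workdir/cloud-config.yml" > "$workdir/rendered.yml"

rendered=$(cat "$workdir/rendered.yml")
echo "$rendered"
rm -rf "$workdir"
```

The `#` character is used as the sed delimiter, which avoids having to escape the slashes that a URL in the file may contain.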

Reboot the instance so that its configuration takes effect:

core@Kube-MASTER ~ $ sudo reboot

We check that our instance is properly initialized:

core@Kube-MASTER ~ $ sudo fleetctl list-machines

MACHINE IP METADATA

aee19a88... 10.1.1.138 role=master

core@Kube-MASTER ~ $ sudo etcdctl ls --recursive

/coreos.com

/coreos.com/updateengine

/coreos.com/updateengine/rebootlock

/coreos.com/updateengine/rebootlock/semaphore

/coreos.com/network

/coreos.com/network/config

/coreos.com/network/subnets

/coreos.com/network/subnets/10.244.69.0-24

/registry

/registry/ranges

/registry/ranges/serviceips

/registry/ranges/servicenodeports

/registry/namespaces

/registry/namespaces/default

/registry/services

/registry/services/endpoints

/registry/services/endpoints/default

/registry/services/endpoints/default/kubernetes

/registry/services/endpoints/default/kubernetes-ro

/registry/services/specs

/registry/services/specs/default

/registry/services/specs/default/kubernetes

/registry/services/specs/default/kubernetes-ro

/registry/serviceaccounts

/registry/serviceaccounts/default

/registry/serviceaccounts/default/default

You can also list the services and their listening ports (these include the Kubernetes master server components):

core@Kube-MASTER ~ $ sudo netstat -taupen | grep LISTEN

tcp 0 0 10.1.1.138:7001 0.0.0.0:* LISTEN 232 16319 634/etcd2

tcp 0 0 10.1.1.138:7080 0.0.0.0:* LISTEN 0 19392 1047/kube-apiserver

tcp 0 0 0.0.0.0:5000 0.0.0.0:* LISTEN 0 19142 973/python

tcp 0 0 127.0.0.1:10251 0.0.0.0:* LISTEN 0 20047 1075/kube-scheduler

tcp 0 0 10.1.1.138:6443 0.0.0.0:* LISTEN 0 19406 1047/kube-apiserver

tcp 0 0 0.0.0.0:5355 0.0.0.0:* LISTEN 245 14794 502/systemd-resolve

tcp 0 0 127.0.0.1:10252 0.0.0.0:* LISTEN 0 19653 1058/kube-controlle

tcp 0 0 10.1.1.138:2380 0.0.0.0:* LISTEN 232 16313 634/etcd2

tcp6 0 0 :::8080 :::* LISTEN 0 19390 1047/kube-apiserver

tcp6 0 0 :::22 :::* LISTEN 0 13647 1/systemd

tcp6 0 0 :::4001 :::* LISTEN 232 16321 634/etcd2

tcp6 0 0 :::2379 :::* LISTEN 232 16320 634/etcd2

tcp6 0 0 :::5355 :::* LISTEN 245 14796 502/systemd-resolve
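
When only one component is of interest, the netstat output can be filtered per program. The following sketch assumes the column layout shown above (state in column 6, PID/program in the last column); `listening_ports` is a hypothetical helper name.

```shell
# Hypothetical helper: prints the listening port of every line of
# `netstat -taupen` output (on stdin) whose program name contains $1.
listening_ports() {
    awk -v prog="$1" '
        $6 == "LISTEN" && index($NF, prog) > 0 {
            n = split($4, parts, ":")   # the port is the last ":"-separated field
            print parts[n]
        }'
}

# Three lines copied from the master output above.
sample='tcp 0 0 10.1.1.138:7080 0.0.0.0:* LISTEN 0 19392 1047/kube-apiserver
tcp 0 0 10.1.1.138:6443 0.0.0.0:* LISTEN 0 19406 1047/kube-apiserver
tcp6 0 0 :::8080 :::* LISTEN 0 19390 1047/kube-apiserver'

apiserver_ports=$(printf '%s\n' "$sample" | listening_ports kube-apiserver | sort -n | paste -s -d ' ' -)
echo "kube-apiserver listens on: $apiserver_ports"
```

On the master itself, the helper could be fed directly with `sudo netstat -taupen | listening_ports kube-apiserver`.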

Kubernetes Minion instances configuration:

Overwrite the default cloud-config.yml file with our Kubernetes minion configuration by running the following commands on every instance that will play the role of Kubernetes minion (only on the instances that will act as node/minion, and in the order shown below):

core@Kube-MINION1 ~ $ sudo wget -O /usr/share/oem/cloud-config.yml http://mirror02.ikoula.com/priv/coreos/kubernetes-minion.yaml

--2015-06-22 16:39:26-- http://mirror02.ikoula.com/priv/coreos/kubernetes-minion.yaml

Resolving mirror02.ikoula.com... 80.93.X.X, 2a00:c70:1:80:93:81:178:1

Connecting to mirror02.ikoula.com|80.93.X.X|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5210 (5.1K) [text/plain]

Saving to: '/usr/share/oem/cloud-config.yml'

/usr/share/oem/cloud-config.yml 100%[===================================================================================================>] 5.09K --.-KB/s in 0s

2015-06-22 16:39:26 (428 MB/s) - '/usr/share/oem/cloud-config.yml' saved [5210/5210]

core@Kube-MINION1 ~ $ export `cat /etc/environment`

Caution: in the command below, replace 10.1.1.138 with the private IP address of your own Kubernetes master instance:

core@Kube-MINION1 ~ $ sudo sed -i 's#MASTER_PRIVATE_IP#10.1.1.138#g' /usr/share/oem/cloud-config.yml
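
Because the master address is typed by hand here, a quick sanity check before substituting can catch typos. This is a sketch only: `is_ipv4` is a hypothetical helper, and the template line is invented for illustration since the real kubernetes-minion.yaml content is not reproduced here.

```shell
# Hypothetical helper: succeeds when $1 looks like a dotted-quad IPv4 address.
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i > 255) exit 1 }'
}

master_ip=10.1.1.138   # replace with the private IP of your own master

if is_ipv4 "$master_ip"; then
    # Hypothetical template line, substituted the same way as in the command above.
    line=$(printf 'api_servers: http://MASTER_PRIVATE_IP:8080\n' | sed 's#MASTER_PRIVATE_IP#'"$master_ip"'#g')
    echo "$line"
else
    echo "not a valid IPv4 address: $master_ip" >&2
fi
```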

Finally, once you have run these commands identically on each of your Kubernetes minion instances/nodes, reboot them so that their configuration takes effect and they join the cluster.

core@Kube-MINION1 ~ $ sudo reboot

We verify that our 2 Kubernetes minion instances have joined the cluster (the command below can be run on any instance that is a member of your cluster):

core@Kube-MASTER ~ $ sudo fleetctl list-machines

MACHINE IP METADATA

5097f972... 10.1.1.215 role=node

aee19a88... 10.1.1.138 role=master

fe86214c... 10.1.1.83 role=node
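
The expected result (1 master, 2 nodes) can be checked automatically by counting roles in the `fleetctl list-machines` output. This is a minimal sketch assuming the three-column layout shown above; `count_role` is a hypothetical helper name.

```shell
# Hypothetical helper: counts the machines on stdin whose metadata is role=$1.
count_role() {
    awk -v want="role=$1" '$NF == want { n++ } END { print n + 0 }'
}

# Output copied from the command above.
sample='MACHINE IP METADATA
5097f972... 10.1.1.215 role=node
aee19a88... 10.1.1.138 role=master
fe86214c... 10.1.1.83 role=node'

nodes=$(printf '%s\n' "$sample" | count_role node)
masters=$(printf '%s\n' "$sample" | count_role master)
echo "masters: $masters, nodes: $nodes"
```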

core@Kube-MINION1 ~ $ sudo etcdctl ls --recursive

/coreos.com

/coreos.com/updateengine

/coreos.com/updateengine/rebootlock

/coreos.com/updateengine/rebootlock/semaphore

/coreos.com/network

/coreos.com/network/config

/coreos.com/network/subnets

/coreos.com/network/subnets/10.244.69.0-24

/coreos.com/network/subnets/10.244.38.0-24

/coreos.com/network/subnets/10.244.23.0-24

/registry

/registry/ranges

/registry/ranges/serviceips

/registry/ranges/servicenodeports

/registry/namespaces

/registry/namespaces/default

/registry/services

/registry/services/specs

/registry/services/specs/default

/registry/services/specs/default/kubernetes

/registry/services/specs/default/kubernetes-ro

/registry/services/endpoints

/registry/services/endpoints/default

/registry/services/endpoints/default/kubernetes

/registry/services/endpoints/default/kubernetes-ro

/registry/serviceaccounts

/registry/serviceaccounts/default

/registry/serviceaccounts/default/default

/registry/events

/registry/events/default

/registry/events/default/10.1.1.215.13ea16c9c70924f4

/registry/events/default/10.1.1.83.13ea16f74bd4de1c

/registry/events/default/10.1.1.83.13ea16f77a4e7ab2

/registry/events/default/10.1.1.215.13ea16c991a4ee57

/registry/minions

/registry/minions/10.1.1.215

/registry/minions/10.1.1.83

core@Kube-MINION2 ~ $ sudo etcdctl ls --recursive

/coreos.com

/coreos.com/updateengine

/coreos.com/updateengine/rebootlock

/coreos.com/updateengine/rebootlock/semaphore

/coreos.com/network

/coreos.com/network/config

/coreos.com/network/subnets

/coreos.com/network/subnets/10.244.69.0-24

/coreos.com/network/subnets/10.244.38.0-24

/coreos.com/network/subnets/10.244.23.0-24

/registry

/registry/ranges

/registry/ranges/serviceips

/registry/ranges/servicenodeports

/registry/namespaces

/registry/namespaces/default

/registry/services

/registry/services/specs

/registry/services/specs/default

/registry/services/specs/default/kubernetes

/registry/services/specs/default/kubernetes-ro

/registry/services/endpoints

/registry/services/endpoints/default

/registry/services/endpoints/default/kubernetes

/registry/services/endpoints/default/kubernetes-ro

/registry/serviceaccounts

/registry/serviceaccounts/default

/registry/serviceaccounts/default/default

/registry/events

/registry/events/default

/registry/events/default/10.1.1.83.13ea16f77a4e7ab2

/registry/events/default/10.1.1.215.13ea16c991a4ee57

/registry/events/default/10.1.1.215.13ea16c9c70924f4

/registry/events/default/10.1.1.83.13ea16f74bd4de1c

/registry/minions

/registry/minions/10.1.1.215

/registry/minions/10.1.1.83
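
In these long listings, the entries that matter for this check are the ones under /registry/minions. A short filter can extract them; the sketch below assumes the key layout shown above, and `registered_minions` is a hypothetical helper name.

```shell
# Hypothetical helper: prints the minions registered under /registry/minions,
# given `etcdctl ls --recursive` output on stdin.
registered_minions() {
    sed -n 's#^/registry/minions/##p'
}

# The last three lines of the listing above.
sample='/registry/minions
/registry/minions/10.1.1.215
/registry/minions/10.1.1.83'

minions=$(printf '%s\n' "$sample" | registered_minions | paste -s -d ' ' -)
echo "registered minions: $minions"
```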

On our Kubernetes minion instances, here are the services and their listening ports (including the kubelet service, through which information is exchanged with the Kubernetes master):

core@Kube-MINION1 ~ $ sudo netstat -taupen | grep LISTEN

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 0 18280 849/kube-proxy

tcp 0 0 0.0.0.0:5355 0.0.0.0:* LISTEN 245 14843 500/systemd-resolve

tcp6 0 0 :::49005 :::* LISTEN 0 18284 849/kube-proxy

tcp6 0 0 :::10255 :::* LISTEN 0 19213 1025/kubelet

tcp6 0 0 :::47666 :::* LISTEN 0 18309 849/kube-proxy

tcp6 0 0 :::22 :::* LISTEN 0 13669 1/systemd

tcp6 0 0 :::4001 :::* LISTEN 232 16106 617/etcd2

tcp6 0 0 :::4194 :::* LISTEN 0 19096 1025/kubelet

tcp6 0 0 :::10248 :::* LISTEN 0 19210 1025/kubelet

tcp6 0 0 :::10250 :::* LISTEN 0 19305 1025/kubelet

tcp6 0 0 :::2379 :::* LISTEN 232 16105 617/etcd2

tcp6 0 0 :::5355 :::* LISTEN 245 14845 500/systemd-resolve

Verifying communication with the Kubernetes master API:

The Kubernetes UI:

To access the Kubernetes dashboard, you need to allow connections to port 8080 (Kubernetes master API server) and, if required (advanced zone), set up port forwarding to port 8080 of your Kubernetes master instance. Then simply open the URL http://adresse_ip_publique_instance_kubernetes_master:8080/static/app/#/dashboard/ in your browser, replacing adresse_ip_publique_instance_kubernetes_master with the public IP address of your Kubernetes master instance:

From this dashboard you can, among other things, display information about your nodes (Kubernetes minions). To do so, click on "Views":

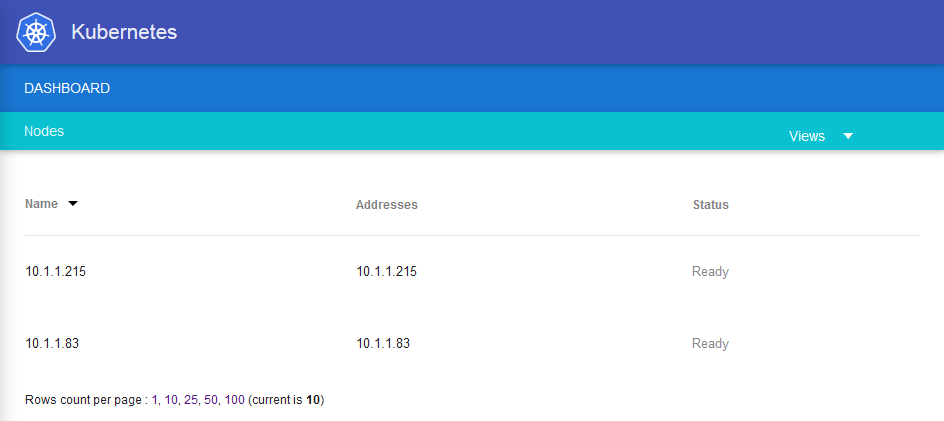

Click on "Nodes":

The list of your Kubernetes minion nodes appears:

Click on one of them to display the information about that node (versions of Docker, the system, kube-proxy, kubelet, etc.):

Kubernetes CLI:

You can also use the kubectl tool from your Kubernetes master instance. To do so, install this utility as follows:

Once connected over SSH to your Kubernetes master instance, enter the following commands:

core@Kube-MASTER ~ $ sudo wget -O /opt/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/v0.17.0/bin/linux/amd64/kubectl

--2015-06-23 11:39:09-- https://storage.googleapis.com/kubernetes-release/release/v0.17.0/bin/linux/amd64/kubectl

Resolving storage.googleapis.com... 64.233.166.128, 2a00:1450:400c:c09::80

Connecting to storage.googleapis.com|64.233.166.128|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 20077224 (19M) [application/octet-stream]

Saving to: '/opt/bin/kubectl'

/opt/bin/kubectl 100%[===================================================================================================>] 19.15M 1.18MB/s in 16s

2015-06-23 11:39:26 (1.18 MB/s) - '/opt/bin/kubectl' saved [20077224/20077224]

core@Kube-MASTER ~ $ sudo chmod 755 /opt/bin/kubectl

Test communication with your Kubernetes API:

core@Kube-MASTER ~ $ kubectl get node

NAME LABELS STATUS

10.1.1.215 kubernetes.io/hostname=10.1.1.215 Ready

10.1.1.83 kubernetes.io/hostname=10.1.1.83 Ready

core@Kube-MASTER ~ $ kubectl cluster-info

Kubernetes master is running at http://localhost:8080
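
The `kubectl get node` output above can also be checked by a script rather than by eye. This sketch assumes the three-column format printed by this kubectl version (v0.17.0), with the status in the last column; `not_ready_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: prints the name of every node whose status is not "Ready",
# given `kubectl get node` output on stdin (the header line is skipped).
not_ready_nodes() {
    awk 'NR > 1 && $NF != "Ready" { print $1 }'
}

# Output copied from the command above.
sample='NAME LABELS STATUS
10.1.1.215 kubernetes.io/hostname=10.1.1.215 Ready
10.1.1.83 kubernetes.io/hostname=10.1.1.83 Ready'

pending=$(printf '%s\n' "$sample" | not_ready_nodes)
if [ -z "$pending" ]; then
    echo "all nodes are Ready"
else
    echo "nodes not ready: $pending"
fi
```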

We can now deploy a first Nginx container in our cluster:

core@Kube-MASTER ~ $ kubectl run-container nginx --image=nginx

CONTROLLER CONTAINER(S) IMAGE(S) SELECTOR REPLICAS

nginx nginx nginx run-container=nginx 1

We can then see on which of our hosts this container is deployed, the name of the pod, and the IP address assigned to it:

core@Kube-MASTER ~ $ kubectl get pods

POD IP CONTAINER(S) IMAGE(S) HOST LABELS STATUS CREATED MESSAGE

nginx-zia71 10.244.38.2 10.1.1.215/10.1.1.215 run-container=nginx Running 3 minutes

nginx nginx Running 1 minutes

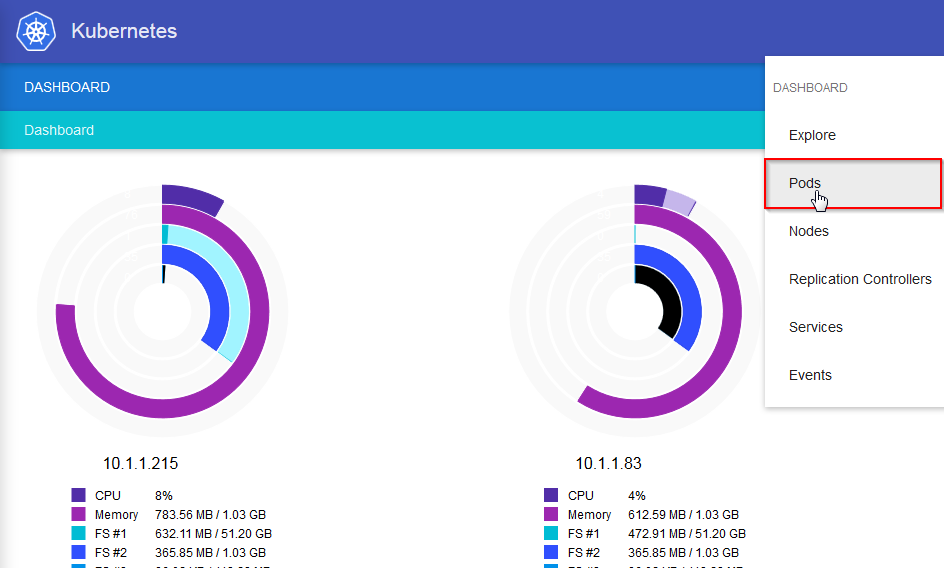

The same information is available in the Kubernetes UI dashboard, under "Views" then "Pods":

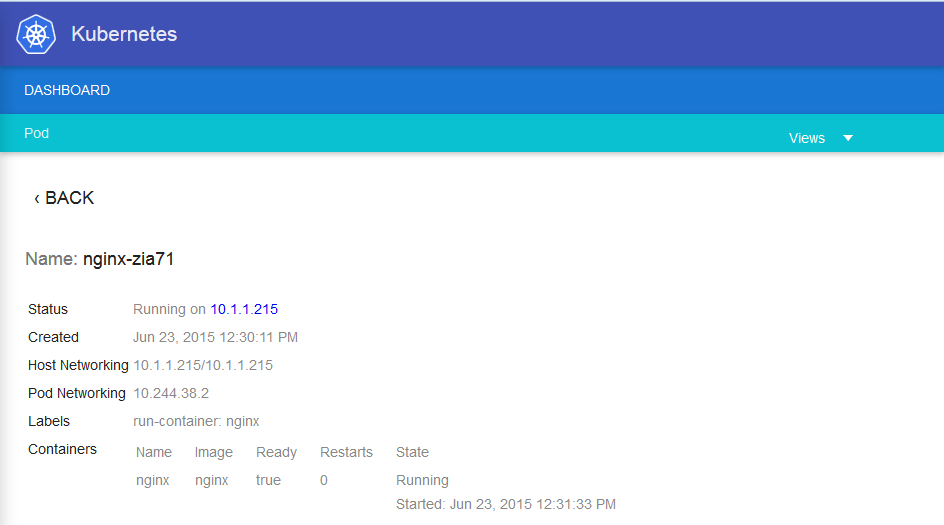

We can see the IP of the host on which this container is deployed, along with the name of the pod and its IP address:

Clicking on it shows more details about our pod:

We can stop our container simply:

core@Kube-MASTER ~ $ kubectl stop rc nginx

replicationcontrollers/nginx

We can also deploy our container with 2 replicas:

core@Kube-MASTER ~ $ kubectl run-container nginx --image=nginx --replicas=2

CONTROLLER CONTAINER(S) IMAGE(S) SELECTOR REPLICAS

nginx nginx nginx run-container=nginx 2

core@Kube-MASTER ~ $ kubectl get pods

POD IP CONTAINER(S) IMAGE(S) HOST LABELS STATUS CREATED MESSAGE

nginx-7gen5 10.244.38.3 10.1.1.215/10.1.1.215 run-container=nginx Running About a minute

nginx nginx Running 39 seconds

nginx-w4xue 10.244.23.3 10.1.1.83/10.1.1.83 run-container=nginx Running About a minute

nginx nginx Running About a minute
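
The listing above shows one replica on each minion. To summarize the placement when there are more pods, the output can be aggregated per host. This is a sketch assuming the layout printed by this kubectl version, where the pod line carries the host as `ip/ip` in its third column; `pods_per_host` is a hypothetical helper name.

```shell
# Hypothetical helper: counts the pods whose name starts with $1 on each host,
# given `kubectl get pods` output on stdin.
pods_per_host() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 { split($3, h, "/"); count[h[1]]++ }
        END { for (host in count) print host, count[host] }' | sort
}

# Output copied from the command above.
sample='POD IP CONTAINER(S) IMAGE(S) HOST LABELS STATUS CREATED MESSAGE
nginx-7gen5 10.244.38.3 10.1.1.215/10.1.1.215 run-container=nginx Running About a minute
nginx nginx Running 39 seconds
nginx-w4xue 10.244.23.3 10.1.1.83/10.1.1.83 run-container=nginx Running About a minute
nginx nginx Running About a minute'

spread=$(printf '%s\n' "$sample" | pods_per_host nginx-)
echo "$spread"
```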

For more information on the architecture, components, and operation of a Kubernetes cluster, we invite you to read the official "Kubernetes architecture" documentation.
